Going beyond transparency

This piece was written by PI volunteer Natalie Chyi.

Transparency is necessary to ensure that those in power, including governments and companies, are not able to operate in the dark, away from public scrutiny. That’s why calls for more transparency are routinely made by everyone from civil society and journalists to politicians.

The bigger picture is often lost when transparency is presented as the only solution to shadowy state and corporate power. For one, the term is so loosely defined that companies use it to describe any disclosure of information not previously available to users, even if the information provided is incomplete or misleading. And while increased transparency remains vital, on its own it does not give people enough practical power to effect change and hold institutions to account. Transparency also assumes that greater access to information will, by itself, enable people to exercise meaningful control over that information.

Of course, privacy notices and the ability to access the information companies hold on us are incredibly important. This sort of access is vital to illustrating how companies use data.

However, transparency needs to be accompanied by measures that strengthen people’s capacity to act upon the available information. The onus also needs to be put back on companies by requiring them to build privacy-by-design technical and operational practices into systems while those systems are being designed, rather than as an afterthought.

The limits of transparency

Under EU data protection legislation, people have a right of access to the information companies hold about them. Among other things, users have the right to obtain a copy of their personal data, a list of recipients to whom their personal data is disclosed, the period for which companies retain their data, and information about the existence of automated decision-making (including profiling).

But PI argues that the data companies make available to users today often does not satisfy the requirements of the right of access under the GDPR, nor does it cover the full scope of data PI believes companies should provide.

For example, there is a lot of information missing from Facebook’s Download Your Data tool. This is generally information users may have given Facebook access to unknowingly, like data collected from their online browsing or information collected about the apps they use.

Data-intensive companies, including consumer-facing platforms, also infer information about their users. These inferences can be combined with data from other companies to create even more detailed profiles of people. This runs contrary to how most users understand privacy and the extent of the information companies hold on them: namely, information they have knowingly and overtly disclosed.

One example of this kind of inferred information is the “Elo score” that Tinder’s algorithm calculates for all its users, ranking their desirability based on other users’ swiping activity on their profile. Even though this score hugely affects a user’s experience on Tinder, it is not included in the information provided by Tinder’s Download My Data tool. Tinder also doesn’t give any information about the retention period, or about third parties who have access to this data.
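The article does not say how Tinder computes this score, and the company has not published its formula. To show why a score like this is inferred data rather than data a user provides, the sketch below assumes the textbook Elo update from chess, with a right swipe treated as a “win” for the profile being viewed. The function names, the starting rating of 1500 and the K-factor of 32 are illustrative assumptions, not Tinder’s actual parameters.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Classic Elo expectation that A 'wins' against B (a value between 0 and 1)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_after_swipe(profile_rating: float, swiper_rating: float,
                       liked: bool, k: float = 32.0) -> float:
    """Hypothetical mapping: a right swipe counts as a win for the viewed profile,
    a left swipe as a loss. Only the swiper's behaviour and rating are used;
    nothing the profile owner entered affects the update."""
    outcome = 1.0 if liked else 0.0
    return profile_rating + k * (outcome - expected_score(profile_rating, swiper_rating))


# Two users starting at the same assumed rating of 1500.
print(update_after_swipe(1500, 1500, liked=True))   # 1516.0
print(update_after_swipe(1500, 1500, liked=False))  # 1484.0
```

Under this model a right swipe from a highly rated swiper raises the score more than one from a low-rated swiper, so the score is shaped entirely by other people’s behaviour, which is exactly the kind of derived data a download tool can leave out.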

It is troubling that this is what transparency today looks like. When companies only feed users back the information that they have knowingly provided and keep them in the dark about how they are being profiled and how their profiles affect them, it is impossible for people to grasp the wider potential harms of sharing their data with these platforms.

An unfair burden

Even if we were given access to all the information that companies have generated about us, such transparency would still not be enough for people to easily do something about it, nor would the wider problem be resolved.

Firstly, in order for users to actually gain this knowledge, they must read a privacy policy that conveys vast quantities of information in legal and technical terms. These documents are often hard for users to make sense of because of their complexity. It is also worth noting that just because information is available doesn’t mean that people will be able to interpret it, and it is usually society’s most privileged who are best equipped to make sense of and use the information they have access to.

These policies are also time-consuming to go through. In fact, researchers found that reading all the privacy policies you encounter in a year would take 76 work days. And with the GDPR, which requires companies to disclose more information at a higher level of detail, these privacy policies are unlikely to get any shorter. Even though the new GDPR-compliant privacy policies are clearly formatted and written in plain language, they still take a long time to read. Twitter’s is 12 pages long, Instagram’s is 11 pages, and Netflix’s is 9 pages. Reddit’s comes in shortest at 6 pages, but this still takes an estimated 13 minutes to read, and probably longer to actually digest.

Aside from the issue of time, another issue is the lack of measures that allow people to act practically upon the available information. There is little incentive for users to read such dense privacy policies, especially on their phones, when they don’t have the power to negotiate something better.

In one example, PayPal recently released a list of 600+ third parties that users’ personal information may be shared with. PayPal users can now look through the 98-page list and see which companies their information is shared with, and for what reason. Suppose they realise that their information is being shared with data brokers, and they’re unhappy about it. What are they supposed to do then?

Even with the information out in the open, the problem still exists, and users have very limited options to respond. In most cases, they can utilise a small selection of opt-outs available, which may not do much to help.

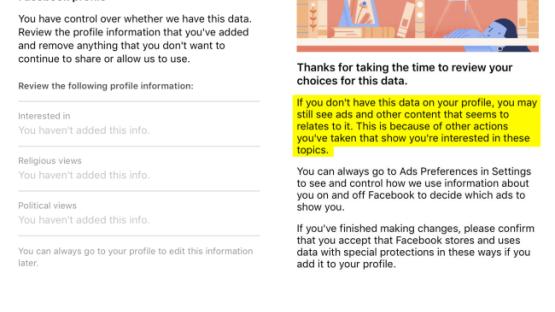

For example, it is now possible for users to opt out of providing sensitive personal data (like sexuality or religion) to Facebook. However, the company states that even if you choose not to share this information, it may still infer it from the other data it has on you. Thus, whether or not you choose to share your sexuality, Facebook may still place you in the “interested in men” or “interested in women” category to be targeted for ads. (This also raises the problems of being mis-profiled: whether or not the inferences are true, they are treated as fact and can influence the options available to you.)

Ultimately, the approach is a take-it-or-leave-it one of forced consent. If users don’t agree with the options available to them, the only solution is to stop using the service completely, as this screenshot from Twitter shows.

Without mechanisms for users to act upon the available information, it is questionable how much practical difference this transparency makes for people.

And even if a user chooses to adopt one of these solutions (i.e. opting out or deleting their account) to protect their personal privacy, that action doesn’t change the fact that the default for all other users is still set to share. This goes to the crux of the problem: transparency doesn’t actually fix the underlying problematic practices it exposes (i.e. secret data sharing, surveillance, profiling, discrimination). There is a systemic problem that cannot be solved simply by giving people more extensive notice and control, because platform architectures are built to maximise frictionless data collection. The design of these platforms reflects their billion-dollar business models of collecting, using, and selling user data. In the face of this, transparency alone has simply made the problem visible, without doing anything to remedy it.

What needs to be done?

Transparency is a necessary precondition to identifying and challenging inaccuracies and misuse of information, as well as non-compliance with data protection law. Without it, it is difficult for people to know where they stand. PI believes that all users should know when and how their personal data is being generated, collected, and used by companies (and governments). People should have access to the data unknowingly collected from their devices and use of services (including device-level data), in both raw and meaningful forms. People should also have full access to their data profile, including all derived data, as well as the categories they have been assigned. Along with this, they should be alerted to when their online or offline experiences are being shaped by their data profile, from targeted advertising to news to access to services.

But it should also be clear now that information alone will not empower people to the extent necessary. Even if companies operate under full transparency, data can still be used in ways that people cannot even imagine to define and manipulate their lives. And in the end, transparency simply shifts the work from the platform to the average user, who has neither the time nor the ability to properly hold these companies to account. To prevent this, it is no longer acceptable just to push for more transparency as the solution.

PI believes that effective data protection is first and foremost a matter of design, and should therefore be built into platforms from the beginning. Data-intensive companies need to engage in privacy by design, which is an obligation under the GDPR. This includes:

- Full functionality of services without a privacy trade-off, as users should not have to choose between giving up their data and using a service.

- Restraints on potential abuses arising from the vast accumulation of data, through:

    - Data minimisation, as generating and processing less data means less data can be misused or breached. A good example of this is the messaging app Signal, which only records when an account was created and when the user last connected to Signal servers. Aiming to gather as little information as possible, it does not collect any metadata, and it encrypts all its users’ conversations. End-to-end encryption as the default in devices, networks and platforms, for data in transit and at rest, is a highly recommended measure.

    - Purpose limitation. Data should only be gathered and processed for specified, explicit, and legitimate purposes, such as the provision of a particular function. This would limit how data is used to construct profiles and to make decisions about people, groups, and societies.

    - Storage limitation, or a limited retention period for data gathered.

- Increased emphasis on security, in order to minimise the risk of an insecure, unpatched, and unmaintained system leaving users’ devices and data vulnerable. This has particular implications for users in marginalised communities. Manufacturers and vendors need to be held responsible for the security of the products they manufacture and sell, throughout a clearly identified period. Additionally, security problems need timely fixes, including updates, patches, and workarounds.
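As a rough illustration of data minimisation and storage limitation working together, the sketch below shows a hypothetical account record that keeps only a creation time and a last-connection day (loosely modelled on the Signal example, not on Signal’s actual code), coarsens connection timestamps to a calendar day before storing them, and deletes records not seen within an assumed retention period. The class and function names and the retention policy are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date, datetime, timedelta


@dataclass
class MinimalAccountRecord:
    """Hypothetical schema: no contacts, message content or other metadata."""
    account_id: str
    created_at: datetime   # when the account was created
    last_connected: date   # day only: the exact time is never stored


def record_connection(record: MinimalAccountRecord, when: datetime) -> None:
    # Data minimisation: coarsen the timestamp to a calendar day before storing it.
    record.last_connected = when.date()


def purge_expired(records: list[MinimalAccountRecord], retention: timedelta,
                  today: date) -> list[MinimalAccountRecord]:
    # Storage limitation: keep only records seen within the (assumed) retention period.
    cutoff = today - retention
    return [r for r in records if r.last_connected >= cutoff]


active = MinimalAccountRecord("a1", datetime(2024, 1, 1, 9, 30), date(2024, 1, 1))
record_connection(active, datetime(2024, 3, 15, 22, 45))
stale = MinimalAccountRecord("a2", datetime(2023, 1, 1), date(2023, 6, 1))
kept = purge_expired([active, stale], timedelta(days=180), date(2024, 4, 1))
print([r.account_id for r in kept])  # ['a1']
```

The design choice is that minimisation happens at write time: because the exact connection time is discarded before storage, it cannot later be breached, subpoenaed, or repurposed for profiling.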

We also believe that additional regulation is needed: loopholes and exemptions in data protection law around profiling must be closed. Currently, not all data protection laws recognise the use of automated processing to derive, infer, predict or evaluate aspects of an individual. Data protection principles need to apply equally to the inferences, insights, and intelligence that are produced. People should have as much control over their data profiles as they do over information they knowingly provide to companies, including the rights to erasure and rectification. People should be able to object to and shape their profiles: if a user declines to share their religious or political views with Facebook but Facebook infers them and includes them in the user’s data profile, the user should be able to delete that inference.

In addition to data protection laws, sectoral regulation and strong ethical frameworks should guide the implementation, application and oversight of automated decision-making systems. When profiling generates insights or when automation is used to make decisions about people, users as well as regulators should be able to determine how a decision has been made, and whether the regular use of these systems violates existing laws, particularly regarding discrimination, privacy, and data protection.