Through An App, Darkly: How Companies Construct Our Financial Identity

Privacy International is celebrating Data Privacy Week, where we’ll be talking about privacy and issues related to control, data protection, surveillance and identity. Join the conversation on Twitter using #dataprivacyweek.

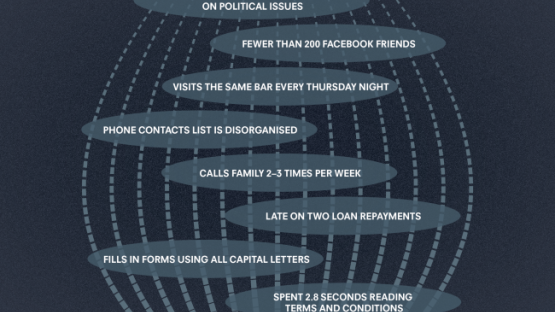

If you were looking for a loan, what kind of information would you be happy with the lender using to make the decision? You might expect data about your earnings, or whether you’ve repaid a loan before. But, in the changing financial sector, we are seeing more and more sources of data being used. “All data is credit data”, as the CEO of ZestFinance famously said. As well as data from credit reference agencies and data brokers, this includes data from how you use their website, from social media, or from mobile apps.

This isn’t just data about your financial standing. It’s data about your social circle (did your friends repay their loans?) and even your state of mind: did you read the terms and conditions on the website, and if so for how long? One of the great lies of the financial services space is that this is just boring, dry financial data. It’s not: companies are making use of some of the most intimate and personal information about us.

What is done with this data? It’s used to profile and judge us, to make decisions about our financial lives. But in the scope of what lenders can know about us, this goes way beyond conventional credit scoring. The machine learning involved throws up decisions based on factors that seem utterly bizarre: Do entries in your phonebook include surnames? How often do you call your mother?

Furthermore, the risks of discrimination emerging from this are real and pressing: the data that is collected, and the data derived from it, says a lot about us, so serious consideration has to be given to how these algorithms can be prevented from discriminating against particular groups or individuals.

But we also have to realise how this data changes our behaviour. A credit score not only measures, it also alters behaviour. Many of us are familiar with attempting to ‘build’ our traditional credit file by taking out a credit card. But, with the breadth of data that’s gathered in the fintech industry, that change in behaviour can go much deeper. It can change the way we post on social media, and even which friends we keep in contact with. We see in China, with its pilots of a “social credit” system, how the ability to get credit can be used to manipulate our behaviour.

Companies expect us to trust them more and more. We’re letting them have access to more and more data about ourselves, to analyse in ways that we don’t understand, creating data we don’t have access to, and in some cases in jurisdictions outside our control. We’re expected to trust them to keep all of this secure: a trust that is frequently misplaced, as breaches at Equifax and Cash Converters have recently made clear.

In many cases, we’ve given companies “consent” to access all of this data, usually in an “all or nothing” checkbox. But that’s far from an adequate protection. Those in a vulnerable position, for whom the only source of credit is the alternative credit scoring sector, often have no choice but to accept the terms and conditions.

We must look for ways to broaden the choice that people have in the financial services they can access, but our starting point should not be that their data is collected and analysed without any protections. The human right to privacy must be an essential element of fintech, which is why Privacy International is calling upon fintechs, regulators and governments to take the privacy consequences of fintech seriously. We need regulations in place to ensure that data is used to empower customers, not to exclude or surveil them. But we also need fintechs themselves to recognise the ethical consequences of their use of data, and to no longer treat customers’ personal data as a simple commodity to be traded.

As traditional financial institutions rush to catch up with the startups, we’re heading towards a scenario where it may become impossible to access a financial product without allowing access to every last bit of information about ourselves. We should be looking towards a different future for the financial sector, one in which technology is used to protect our privacy rather than abuse it.

Read our report on Fintech: Privacy and Identity in the New Data-Intensive Financial Sector.