Search

Content type: Explainer

Behind every machine are the people who make its cogs turn: the developers who build (code) the machine, the human evaluators who assess the basic machine's performance, even the people who build its physical parts. In the case of the large language models (LLMs) powering your AI systems, these people are the invisible data labellers from all over the world who manually annotate the datasets that train the machine to recognise what is the colour…

Content type: Examples

Companies like the Australian data services company Appen are part of a vast, hidden industry of low-paid workers in some of the globe's cheapest labour markets who label images, video, and text to provide the datasets used to train the algorithms that power new bots. Appen, which has 1 million contributors, includes among its clients Amazon, Microsoft, Google, and Meta. According to Grand View Research, the global data collection and labelling market was valued at $2.22 billion in 2022 and is…

Content type: Examples

Four people in Kenya have filed a petition calling on the government to investigate conditions for contractors reviewing the content used to train large language models such as OpenAI's ChatGPT. They allege that the conditions are exploitative and have left some former contractors traumatized. The petition relates to a contract between OpenAI and data annotation services company Sama. Content moderation is necessary because LLM algorithms must be trained to recognise prompts that would generate harmful…

Content type: Long Read

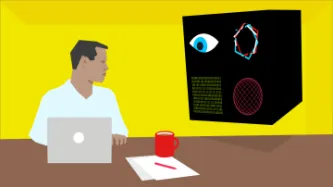

Imagine your performance at work was assessed directly from the number of emails you sent, the time you spent editing a document, the time you spent in meetings, or even how much you moved your mouse. This may sound ludicrous, but your boss might be doing exactly that. More and more stories are emerging of people being called into meetings to justify gaps in their work, only to find out their boss had been watching them work without their knowledge.

The Covid-19 global pandemic has reshuffled the…

Content type: Examples

As working from home expands, employers are ramping up surveillance using the features built into software such as Microsoft Teams and Slack, which report when employees are active, or requiring employees to attend early-morning video conferences with webcams switched on. In early 2020, PwC developed a facial recognition tool to log when employees are away from their home computer screens.

Source: https://www.theguardian.com/world/2020/sep/27/shirking-from-home-staff-feel-the-heat-as-…