Algorithms, Intelligence, and Learning, Oh My

Tech firms and governments are keen to use algorithms and AI everywhere. We urgently need to understand what algorithms, intelligence, and machine learning actually are so that we can disentangle the optimism from the hype. Doing so will also help us develop meaningful responses and, ultimately, protections and safeguards.

Many technologists emerge from university, college or graduate courses believing that technology is neutral, and that any system they help develop will therefore be completely neutral too. Looking in from the outside, society does not naturally share this optimistic view of technology.

Just as Dorothy sets off down the yellow brick road fearful of lions and tigers and bears, many people initially see technology as something to fear, and that was the first reaction of many to algorithms when they entered the popular scene a few years ago. However, when Dorothy meets the Lion, she realises that he is not so scary, just misunderstood. Artificial Intelligence and Machine Learning are now following a similar path: we start with fear, and then move to acceptance and optimism. We need to dislodge this uncritical optimism from the public view, but also from the understanding of technologists, who quickly become its cheerleaders.

Apocalypse Now (Or in the future)

From the apocalyptic movie franchises of the 1980s to the science fiction writers well before them, the image of technology as a future menace has been inescapable. At some point, however, the mythology gave way and a more sober and accurate perception of technology emerged, helped along as much by its failures as by its successes. The aptly named Blue Screen of Death became a symbol of the unreliability that displaced the techno-optimism then emerging. Just because we don’t see BSODs as often as we used to doesn’t mean things aren’t failing. And just because our technology doesn’t look like HAL or the Terminator doesn’t mean it can’t harm us.

Nobody was going to give up on computers, however, because most practitioners and the industry had a very different vision of the future, one where devices augmented and complemented reality. A device on every desk became a device in every briefcase, and then a device in every pocket. Now we have the vision of a device in every thing. Constant, real-time sensing, processing, transmission and actuation will configure our environment exactly as we want it, when we want it. But what if our crystal ball says things should be configured differently for me than for you? Who or what decides which configuration wins? If resources cannot fulfil everyone’s desires, who gets left out, and why? These are the critical questions for today and tomorrow.

On top of computing being increasingly everywhere, the industry is keen to use it to generate more data on all of us. Every year companies spend millions generating more data in the hope that it will help them make sense of the data they already have. And when that fails, the answer is, you guessed it, more data. It’s reminiscent of the Simpsons line: “Dig up stupid.” This approach is far from harmless, because some of this data is highly revealing about the people it describes, and it is not necessarily used for its original purpose. To make “sense” of the data, cheap and readily available processing power has been thrown at the problem. But it has also been thrown at other problems, ones we may not even know our data is being used to solve.

Algorithms

Alongside the data deluge, algorithms became the new focus: the amount of data being generated was now so enormous that humans could no longer handle it and turned to automated processing. However, the term algorithm (and, for that matter, Artificial Intelligence and Machine Learning) has been used in many contexts to mean different things, and we urgently need some clarity. Here is how one well-known AI textbook describes algorithms:

"Conventional programs process data using algorithms, or in other words, a series of well-defined step-by-step operations. An algorithm always performs the same operations in the same order, and it always provides an exact solution. Conventional programs do not make mistakes – but programmers sometimes do." - Artificial Intelligence: A Guide to Intelligent Systems, 2nd Edition, M. Negnevitsky, 2005.

"[Conventional programs] process data and use algorithms, a series of well-defined operations, to solve general numerical problems." - Artificial Intelligence: A Guide to Intelligent Systems, 2nd Edition, M. Negnevitsky, 2005.

There is no question that we are all subjected to algorithms in our daily lives, whether it’s the timer on our toaster in the morning or the algorithms beneath the internet that let you read this article right now. As data grows, algorithms are fed more and more information about our personal lives, and they are increasingly trusted with greater control over society. In response to this new reality, there is an admirable drive to improve the fairness, transparency and accountability of algorithms applied to vast quantities of data.

But beyond the theory, our governance systems already struggle with the concepts of fairness, transparency, and accountability. Can we really expect technology to solve the problem anew? Ideally, we would be able to map the legal system’s protections of fairness, justice, and equity into machines. The challenge is that we simply presume this can be done, when the reality is far different.

As ProPublica’s study of sentencing technology showed, the use of algorithms did not eradicate the judiciary’s discrimination problems. Yet we still believe, as if by magic, that fairness can be designed in and discrimination eradicated from our systems. This is a recipe for disaster. Just as we have learned to question discrimination in the legal system, we must learn to see how something similar manifests in our technology. In both, discrimination is elusive. We need a different approach to each, and the two approaches must operate in tandem.

It’s as if people take the final scene of The Wizard of Oz literally, believing that intelligence can be imbued simply by handing over a diploma, as with the Scarecrow. We cannot give a machine compassion or a heart by installing a heart-shaped ticking clock in it, as with the Tin Man. As we have learned, wanting to believe that the legal system is fair and justice is blind doesn’t make it so. It is through openness at all levels, and internal checks, that we can have confidence in the system.

Policy-makers rely on these guarantees because, in theory, they can interrogate and sit in on proceedings. But as will be demonstrated below, this paradigm cannot apply in the modern world of data-driven learning and intelligence. We feed ever more (and often excessive) data to machines in the hope that magic will happen and the computer will be a fairer decision-maker. The uncomfortable truth about the future, which policy-makers and technologists must understand, is that magic doesn’t exist.

If we are to embrace technological progress in the fullest and safest way possible, the solution runs much deeper. It requires systemic change around data generation, collection, analysis, and sharing. We cannot expect the laws of many years ago to adequately protect us from the technology of today. Laws are constantly being stretched beyond their original purpose, and the same is true of technology. The solution requires openness and honesty about all aspects of the systems we are subjected to. A new approach is needed because yesterday’s laws and technologies presumed that people came to technology by their own choice. Now technology is penetrating societies whether they like it or not, and the laws governing how these technologies function are beyond the reach of the people. We must rethink things on this basis, in a world where disconnecting becomes harder and harder.

And all of this presumes that everything goes according to plan and that there are no bad actors out there. Our systems must always be able to withstand the best, brightest and most imaginative of attacks. We must attack our own systems and fix them before someone else attacks them. Again, and surprisingly, we aren’t yet thinking about the future in this way.

How It Really Works: Do-It-Yourself Fairness

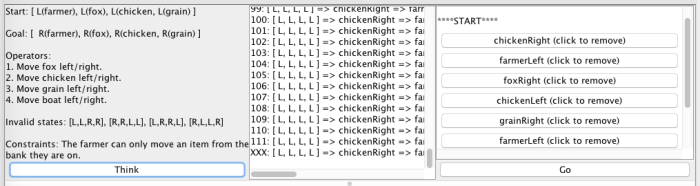

Try building your own algorithm for a farmer who must transport a fox, a chicken and some grain safely across a river. The classic constraints are that the boat carries the farmer and at most one item, the fox cannot be left alone with the chicken, and the chicken cannot be left alone with the grain. You are the farmer in this ancient problem and have to figure out how you will accomplish the task. The accompanying video shows one possible algorithm to solve the problem. Have you built a fair algorithm? If a machine did the same thing as you, would it be acting fairly? We will come back to this question later on.
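To make the puzzle concrete, here is a minimal Python sketch of a fixed algorithm under the classic rules. The state encoding, the names `is_invalid`, `run_plan` and `PLAN`, and the particular plan are illustrative assumptions, not necessarily the algorithm shown in the video. It is a conventional algorithm in the textbook sense: the same steps, in the same order, every time.

```python
# Each participant is on the left ("L") or right ("R") bank.
ITEMS = ("fox", "chicken", "grain")


def is_invalid(state):
    """A bank without the farmer is unsafe if the chicken is there
    together with the fox or the grain."""
    for bank in ("L", "R"):
        if state["farmer"] == bank:
            continue  # the farmer is watching this bank
        here = {item for item in ITEMS if state[item] == bank}
        if {"fox", "chicken"} <= here or {"chicken", "grain"} <= here:
            return True
    return False


def run_plan(plan):
    """Execute a fixed sequence of crossings. Each step names the item the
    farmer carries, or None when he crosses alone. Returns every state
    visited and raises ValueError on an illegal or unsafe step."""
    state = {"farmer": "L", "fox": "L", "chicken": "L", "grain": "L"}
    history = [dict(state)]
    for cargo in plan:
        if cargo is not None and state[cargo] != state["farmer"]:
            raise ValueError(f"{cargo} is not on the farmer's bank")
        other = "R" if state["farmer"] == "L" else "L"
        state["farmer"] = other
        if cargo is not None:
            state[cargo] = other  # the boat holds the farmer plus one item
        if is_invalid(state):
            raise ValueError(f"unsafe state after carrying {cargo}: {state}")
        history.append(dict(state))
    return history


# One well-known solution, written down in advance.
PLAN = ["chicken", None, "fox", "chicken", "grain", None, "chicken"]

final_state = run_plan(PLAN)[-1]
print(final_state)  # everyone ends up on the right bank
```

Running `run_plan(["fox"])` instead raises a `ValueError`, because carrying the fox first leaves the chicken alone with the grain.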

This is, of course, a metaphor: in this system, and with this algorithm, people are the items on the left bank, at the mercy of whatever the farmer decides to do to bring them back and forth to safety on the right bank. Whether it is a border checkpoint, a credit-scoring system or a sentencing system, some people are going to be prioritised over others by the algorithm.

Artificial Intelligence

Now we are looking to AI to solve our problems. Again, the presumption is that while the world is unfair and humans are biased, AI can be designed to be better. But any system that learns from what we do will inevitably learn to be as unfair and biased as we are. Unless someone can write down the equation for fairness or justice, how are we supposed to know fairness when we see it?

How do we evaluate the performance of any system in this space, and how can any system claim to be accurate? If accuracy is how well it mimics our behaviour, then it is mimicking our bias. If it is measured not against our behaviour but against some other standard, then what is that standard, and can I see it, please? We are back to the problem that we cannot ensure fairness in human systems, and therefore we cannot expect a machine to deliver it.

When slick government and company PR departments talk about the accuracy of their systems, they revel in the incompleteness of the claim: accurate compared to what? A human? Yet we don’t seem to care: as long as we hear that accuracy is somewhere in the 90-95% range, we assume that all is well. So long as a predictive policing system’s predictions are claimed to be "twice as accurate as those made by vet cops", we presume everything is fine.
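A toy calculation shows why "accurate compared to what?" matters. The data below is entirely invented and `accuracy` is a hypothetical helper: a model that simply imitates past human decisions scores perfectly against those decisions, and noticeably worse against a different reference.

```python
def accuracy(predictions, reference):
    """Fraction of predictions that agree with the chosen reference labels."""
    if len(predictions) != len(reference):
        raise ValueError("predictions and reference must be the same length")
    hits = sum(p == r for p, r in zip(predictions, reference))
    return hits / len(reference)


# Entirely invented toy data: 1 = "flag as high risk", 0 = "do not flag".
past_human_decisions = [1, 1, 0, 1, 0, 1, 0, 0, 1, 1]
what_actually_happened = [0, 1, 0, 0, 0, 1, 0, 0, 0, 1]

# A model trained to imitate past decisions; here it imitates them perfectly.
model_output = list(past_human_decisions)

print(accuracy(model_output, past_human_decisions))    # 1.0
print(accuracy(model_output, what_actually_happened))  # 0.7
```

The model does not change between the two lines; only the yardstick does.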

This complacency is as much about advertising half-truths as it is about impenetrable jargon, so once again we need to disentangle the jargon. Let’s return to the textbook, which describes intelligent systems as follows:

"The human mental process is internal, and it is too complex to be represented as an algorithm. However, most experts are capable of expressing their knowledge in the form of rules for problem solving." - Artificial Intelligence: A Guide to Intelligent Systems, 2nd Edition, M. Negnevitsky, 2005.

"Unlike conventional programs, expert systems do not follow a prescribed sequence of steps. They permit inexact reasoning and can deal with incomplete, uncertain and fuzzy data." - Artificial Intelligence: A Guide to Intelligent Systems, 2nd Edition, M. Negnevitsky, 2005.

Intelligent systems are employed where we cannot understand a problem well enough to specify it as an algorithm. This bears repeating: they are employed where we can’t write an algorithm, because we don’t know what the right steps are. We may know certain actions we would like taken, but not their order. In essence, an intelligent system builds the algorithm for us, and there is no guarantee that the same algorithm will ever be created again. This is the problem with arguing for the accountability, transparency and fairness of ‘the algorithm’: there isn’t necessarily a single algorithm, and the algorithms that do appear are emergent. As we shall see in the example below, many factors, including the order in which possible actions are considered, affect the outcome, so fairness is as much about those factors as it is about the result.

If we take our farmer example again, the farmer can try every move in his head, and then every move after that, and so on until he reaches the goal state.

In the accompanying animation, the first box shows the invalid states, the second shows all the possibilities the farmer is considering, and the last shows the resulting algorithm. This produces the same solution as the algorithm above. But even in this simple example, the order of operations is important. At every step, the farmer considers what he can do with the fox, then the chicken, and finally the grain. Accordingly, in any solution, the fox is likely to reach the other bank before the grain; if we reverse the order, the grain will likely get there first. Small variations can make a big difference if you are at the back of the line, waiting just to be considered for crossing the river to safety. Is there an order of consideration that is fair?
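The farmer’s search can be sketched in Python as a breadth-first search. This is one possible search strategy, assumed here for illustration (the animation may use another), and the tuple encoding and the names `is_invalid`, `successors` and `solve` are hypothetical. The only thing that changes between the two runs is the order in which the items are considered.

```python
from collections import deque

# Hypothetical encoding: a state is the bank ("L" or "R") of
# (farmer, fox, chicken, grain).
NAMES = ("farmer", "fox", "chicken", "grain")
START = ("L", "L", "L", "L")
GOAL = ("R", "R", "R", "R")


def is_invalid(state):
    """Unsafe if the chicken is away from the farmer with the fox or grain."""
    farmer, fox, chicken, grain = state
    return chicken != farmer and (fox == chicken or grain == chicken)


def successors(state, order):
    """Yield (cargo, next_state) for every safe crossing, trying the items
    in `order` first and crossing alone (cargo None) last."""
    farmer = state[0]
    other = "R" if farmer == "L" else "L"
    for cargo in tuple(order) + (None,):
        nxt = list(state)
        nxt[0] = other
        if cargo is not None:
            i = NAMES.index(cargo)
            if state[i] != farmer:
                continue  # that item is on the other bank
            nxt[i] = other
        nxt = tuple(nxt)
        if not is_invalid(nxt):
            yield cargo, nxt


def solve(order):
    """Breadth-first search from START to GOAL; returns the list of cargo."""
    parents = {START: None}
    queue = deque([START])
    while queue:
        state = queue.popleft()
        if state == GOAL:
            moves = []
            while parents[state] is not None:
                state, cargo = parents[state]
                moves.append(cargo)
            return moves[::-1]
        for cargo, nxt in successors(state, order):
            if nxt not in parents:  # first discovery wins, so order matters
                parents[nxt] = (state, cargo)
                queue.append(nxt)
    return None


print(solve(("fox", "chicken", "grain")))
# ['chicken', None, 'fox', 'chicken', 'grain', None, 'chicken']
print(solve(("grain", "chicken", "fox")))
# ['chicken', None, 'grain', 'chicken', 'fox', None, 'chicken']
```

Both answers are equally short and equally valid; which one the farmer "builds" depends only on the order of consideration.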

In our example, we tell the farmer what options are available to him and also what he cannot do. Whoever controls the farmer’s invalid states can ensure that they make it across the river first. Or, in a more modern example, they can ensure that white people pay less than everyone else for access to some service: simply declare that white people paying more than anyone else is an invalid state, and the farmer carries on blindly according to the rules of the system. Things can be much more subtle than this. And this element of indirect control, introduced by the jump from algorithms to intelligence, can still be exercised even if we let the system figure out the invalid states for itself.
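The same mechanism can be sketched away from the river: a search that returns the first option no rule marks as invalid. Everything here is hypothetical (the groups, the price tiers and the rule), and the enumeration order is simply that of `itertools.permutations`. A single extra "invalid state" is enough to move the highest price from one group onto another, while the search itself never changes.

```python
from itertools import permutations

# Entirely hypothetical groups and price tiers.
GROUPS = ("group_a", "group_b", "group_c")
PRICES = (10, 20, 30)


def first_valid_assignment(invalid_rules):
    """Walk through every way of assigning the price tiers to the groups, in a
    fixed order, and return the first assignment no rule marks as invalid."""
    for prices in permutations(PRICES):
        assignment = dict(zip(GROUPS, prices))
        if not any(rule(assignment) for rule in invalid_rules):
            return assignment
    return None


def group_c_pays_most(assignment):
    """A hypothetical rule that quietly protects one group."""
    return assignment["group_c"] == max(assignment.values())


print(first_valid_assignment([]))
# {'group_a': 10, 'group_b': 20, 'group_c': 30}
print(first_valid_assignment([group_c_pays_most]))
# {'group_a': 10, 'group_b': 30, 'group_c': 20}
```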

Machine Learning

What if we know even less about the problem? What if we don’t know what the invalid states are, or what our operators are? The next trend is to use Machine Learning. Machine learning uses some feedback mechanism to help the reasoner (the system acting intelligently) learn something positive or negative about its actions or, more precisely, learn the consequences of its actions. This augments the reasoner with learning capabilities. The key question here is: who controls the experience and the feedback mechanism? Again, let’s look at how the textbook describes this:

"In general, machine learning involves adaptive mechanisms that enable computers to learn from experience, learn by example and learn by analogy. Learning capabilities can improve the performance of an intelligent system over time. Machine learning mechanisms form the basis for adaptive systems." - Artificial Intelligence: A Guide to Intelligent Systems, 2nd Edition, M. Negnevitsky, 2005.

But there is implicit trial and error occurring here. The learner comes up with a plan of action and begins executing that algorithm. When something negative or positive occurs, the learner learns something from the resulting state, and the reasoner thinks about the problem again with this new piece of knowledge to come up with a new plan of action. This continues until the problem is solved or the machine makes too many mistakes.

In our example, bringing the fox first leaves the chicken alone with the grain, which means sacrificing the grain. And what is the farmer supposed to learn from this? On the one hand, he could learn that only this exact configuration is bad. This might be a naive inference: when a child burns himself on a stove, it would be silly for him to conclude that only that stove will burn him. At the other extreme, the farmer might conclude that the fox can never be on the right bank. Not only is this too general an inference, it will also prevent the farmer from completing his task, because the goal is to get the fox to the right bank. The learned invalid states are in the box on the right, above the animation.

For completeness, you can also see how the learner operates when considering the grain before the fox.
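The trial-and-error loop described above can be sketched as follows, under loudly stated assumptions: the learner plans with breadth-first search, each failure resets the puzzle to the start, the learner can observe when a state turns out to be unsafe (something gets eaten), and it makes the narrowest inference, marking only that exact configuration as invalid. The names `world_is_unsafe`, `plan` and `learn_by_trial_and_error` are hypothetical. Changing `ORDER` to `("grain", "chicken", "fox")` makes the learner consider the grain before the fox.

```python
from collections import deque

# Hypothetical encoding: the bank ("L" or "R") of (farmer, fox, chicken, grain).
NAMES = ("farmer", "fox", "chicken", "grain")
START, GOAL = ("L",) * 4, ("R",) * 4
ORDER = ("fox", "chicken", "grain")  # the order in which items are considered


def world_is_unsafe(state):
    """The environment's hidden rule. The learner never reads it; it only
    sees the consequence when one of its plans lands in such a state."""
    farmer, fox, chicken, grain = state
    return chicken != farmer and (fox == chicken or grain == chicken)


def moves(state):
    """Every physically possible crossing, safe or not: carry one item from
    the farmer's bank (in ORDER), or cross alone (cargo None)."""
    farmer = state[0]
    other = "R" if farmer == "L" else "L"
    for cargo in ORDER + (None,):
        nxt = list(state)
        nxt[0] = other
        if cargo is not None:
            i = NAMES.index(cargo)
            if state[i] != farmer:
                continue
            nxt[i] = other
        yield cargo, tuple(nxt)


def plan(known_bad):
    """Breadth-first search that avoids only the states learned so far."""
    parents = {START: None}
    queue = deque([START])
    while queue:
        state = queue.popleft()
        if state == GOAL:
            steps = []
            while parents[state] is not None:
                state, cargo = parents[state]
                steps.append(cargo)
            return steps[::-1]
        for cargo, nxt in moves(state):
            if nxt not in parents and nxt not in known_bad:
                parents[nxt] = (state, cargo)
                queue.append(nxt)
    return None


def learn_by_trial_and_error(max_mistakes=10):
    """Plan, act, and on failure record the exact failing configuration as
    invalid before replanning. Returns (successful plan or None, mistakes)."""
    known_bad = set()
    mistakes = []  # each entry is a state in which something was eaten
    while len(mistakes) < max_mistakes:
        steps = plan(known_bad)
        if steps is None:
            return None, mistakes
        state = START
        for cargo in steps:
            state = next(nxt for c, nxt in moves(state) if c == cargo)
            if world_is_unsafe(state):
                known_bad.add(state)  # the narrowest possible inference
                mistakes.append(state)
                break
        else:
            return steps, mistakes  # the whole plan ran without a sacrifice
    return None, mistakes


steps, mistakes = learn_by_trial_and_error()
print(mistakes[0])  # ('R', 'R', 'L', 'L'): the fox went first, the grain was eaten
print(len(mistakes), steps)
```

Every entry in `mistakes` is a configuration in which something was eaten: the cost of learning is paid by the items waiting on the bank.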

The dilemma here is as much philosophical as it is technical, but the machine can’t sit around doing a PhD: its job is to try things out until it reaches a solution, any solution. Remember, people are the items on the left bank, looking to be transported safely to the right. We make the sacrifice, whether we are aware of it or not, for the system to learn. But did you sign up to be fodder for an experiment?

Did you sign up to have a company learn about you and then monetise that knowledge? Maybe you did, or maybe you just wanted to visit the hospital for urgent care or sign up for a social media account, and now you and your data are being exploited.

Witchcraft

And the magic potions and witchcraft do not stop there. Recently Admiral, a UK-based car insurance company, announced plans to offer customers cheaper car insurance based on an analysis of their Facebook profiles. What does a social media profile have to do with your insurance risk? Apparently, if you use vague words like “tonight” rather than referring to a definite time, you are indecisive. And being over-confident, as signalled by exclamation marks, will also count against you.
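To show how little stands between a social media post and a price, here is a deliberately crude scoring sketch. It is not Admiral’s model, whose details are not given here: the word list, the weights and the name `risk_score` are invented, echoing only the two signals mentioned above.

```python
import re

# Invented for illustration only: these words and weights are NOT any
# insurer's real model, just placeholders echoing the two signals above.
VAGUE_WORDS = {"tonight", "soon", "later", "sometime"}
WEIGHTS = {"vague_word": 0.5, "exclamation": 1.0}


def risk_score(posts):
    """Count crude textual 'signals' across posts and combine them with
    arbitrary weights. Higher means 'riskier' under these made-up rules."""
    vague = 0
    exclaim = 0
    for post in posts:
        words = re.findall(r"[a-z']+", post.lower())
        vague += sum(word in VAGUE_WORDS for word in words)
        exclaim += post.count("!")
    return WEIGHTS["vague_word"] * vague + WEIGHTS["exclamation"] * exclaim


print(risk_score(["Meet you tonight!", "Passed my test!!"]))  # 3.5
print(risk_score(["Meet you at 7pm.", "Passed my test."]))    # 0.0
```

Two drivers with identical records receive different scores purely because of word choice and punctuation, and every weight is a choice someone made.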

Just as the algorithm, the well-defined sequence of steps you were subjected to, will likely never exist again, the same is true of the intelligence and learning processes around it. Anyone who has put textbook knowledge into practice knows that, for most (if not all) complex and distributed systems, one of the main difficulties is time. Things do not happen instantly. It takes time for information to be learned, and for reasoners to plan and begin executing the algorithm before re-evaluating it. It also takes time for information to propagate into and through the system. With data centres on different continents, how can anybody probe the mental state of the reasoner or learner? How do we know when or where to probe to test for fairness?

Let’s put this into perspective. In a distributed intelligent system that learns, many factors affect the outcome of a decision. Even a system that looks completely fair on paper can be made unfair by its implementation, because of the inescapable lag, or latency, involved in generating, collecting, analysing and transmitting data.
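A minimal simulation of that effect, with invented numbers: the server applies a rule that looks fair on paper, first come, first served, but it is assumed to see only when requests arrive, not when they were sent. The names `order_by_submission` and `serve_first_come_first_served` and all latencies are hypothetical.

```python
# Invented example: (name, time the request was sent in ms,
# network latency in ms to the data centre that keeps the queue).
REQUESTS = [
    ("alice", 0, 120),  # sent first, but routed via a distant data centre
    ("bob", 30, 10),
    ("carol", 50, 40),
]


def order_by_submission(requests):
    """The order a neutral observer would call first come, first served."""
    return [name for name, sent, _ in sorted(requests, key=lambda r: r[1])]


def serve_first_come_first_served(requests):
    """What the server can actually do under our assumption: sort by arrival
    time, because it cannot see when each request was sent."""
    arrivals = sorted((sent + latency, name) for name, sent, latency in requests)
    return [name for _, name in arrivals]


print(order_by_submission(REQUESTS))            # ['alice', 'bob', 'carol']
print(serve_first_come_first_served(REQUESTS))  # ['bob', 'carol', 'alice']
```

The rule is identical in both functions; only the latency between sending and arriving moves Alice from the front of the line to the back.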

We have not passed the point of no return, but we must ensure that the yardstick by which we measure the systems we are subjected to is open and understandable. However, we urgently need technologists, policy-makers and others to understand that an exclusive focus on fairness and transparency will not get us closer to building better systems. Fairness is too vague and open to interpretation. Transparency requires visibility into the whole system, not just on paper, but as it is running. Neither seems within our grasp, and we must look to alternative ways of determining how our systems ought to behave. Relying on fairness is not fair.