Content type: News & Analysis

We’ve been warning for a while now about the risks of AI Assistants. Are these assistants designed for us or to exploit us? The answer to that question hinges on whether the firms building these tools are considering security and privacy from the outset. The initial launches over the last couple of years were not promising. Now, with OpenAI’s agent launch, users deserve to know whether these firms are considering these risks and designing their service for people in the real world. The OpenAI…

Content type: Advocacy

The Open informal consultations on lethal autonomous weapons systems, held in accordance with General Assembly resolution 79/62 at the UN in New York on 12-13 May 2025, examined various legal, humanitarian, security, technological, and ethical aspects of these weapons. These consultations aimed to broaden the scope of AWS discussions beyond those held by the Group of Governmental Experts (GGE) at the UN in Geneva. Find out more about what happened during the discussions at Researching Critical…

Content type: Advocacy

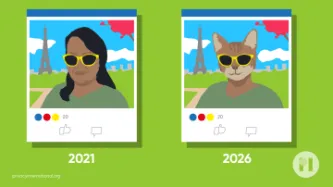

In the wake of Privacy International’s (PI) campaign against the unfettered use of Facial Recognition Technology in the UK, MPs gave inadequate responses to concerns raised by members of the public about the roll-out of this pernicious mass-surveillance technology in public spaces. Their responses also sidestep calls on them to take action. The UK is sleepwalking towards the end of privacy in public. The spread of insidious Facial Recognition Technology (FRT) in public spaces across the country…

Content type: Long Read

Introduction

In early October this year, Google announced that its AI Overviews would now include ads. AI companies have been exploring ways to monetise their AI tools to offset their eye-watering costs, and advertising seems to be part of many of these plans. Microsoft has even rolled out an entire Advertising API for its AI chat tools. As AI becomes a focal point of consumer tech, the next host of the AdTech expansion regime could well be the most popular of these AI tools: AI chatbots.…

Content type: Examples

The UK's Department for Education intends to appoint a project team to test edtech against set criteria and select the highest-quality and most useful products. Extra training will be offered to help teachers develop enhanced skills. Critics suggest it would be better to run a consultation first to work out what schools and teachers want.

Publication: Schools Week

Writer: Lucas Cumiskey

Content type: Examples

The UK's new Labour government are giving AI models special access to the Department for Education's bank of resources in order to encourage technology companies to create better AI tools that reduce teachers' workloads. A competition for the best ideas will award an additional £1 million in development funds.

Publication: Guardian

Writer: Richard Adams

Content type: Examples

The Utah State Board of Education has approved a $3 million contract with Utah-based AEGIX Global that will let K-12 schools in the state apply for funding for AI gun detection software from ZeroEyes for up to four cameras per school. The software will work with the schools' existing camera systems and will notify police when the detection of a firearm is verified at the ZeroEyes control centre. The legislature will consider additional funding if the early implementation is successful. The…

Content type: Long Read

The fourth edition of PI’s Guide to International Law and Surveillance brings together the most significant past and recent developments in international human rights law that reinforce the core human rights principles and standards on surveillance. We hope that it will continue to help researchers, activists, journalists, policymakers, and anyone else working on these issues. The new edition includes, among others, entries on (extra)territorial jurisdiction in surveillance, surveillance of public…

Content type: Explainer

Behind every machine is a human person who makes the cogs in that machine turn: the developer who builds (codes) the machine, the human evaluators who assess the machine's basic performance, even the people who build its physical parts. In the case of the large language models (LLMs) powering your AI systems, these 'human persons' are the invisible data labellers from all over the world who manually annotate the datasets that train the machine to recognise what is the colour…

Content type: Explainer

Introduction

The emergence of large language models (LLMs) in late 2022 has changed people’s understanding of, and interaction with, artificial intelligence (AI). New tools and products that use, or claim to use, AI can be found for almost every purpose – they can write you a novel, pretend to be your girlfriend, help you brush your teeth, take down criminals or predict the future. But LLMs and other similar forms of generative AI create risks – not just big theoretical existential ones – but…

Content type: News & Analysis

Is the AI hype fading? Consumer products with AI assistants are disappointing across the board, and tech CEOs are struggling to give examples of use cases that justify spending billions on graphics processing units (GPUs) and model training. Meanwhile, data protection concerns are still far from being addressed.

Yet, the believers remain. OpenAI's presentation of ChatGPT was reminiscent of the movie Her (with Scarlett Johansson's voice even being replicated à la the movie), Google…

Content type: Advocacy

Privacy International (PI) welcomes the opportunity to provide input to the forthcoming report of the Special Rapporteur on contemporary forms of racism, racial discrimination, xenophobia and related intolerance to the 56th session of the Human Rights Council, which will examine and analyse the relationship between artificial intelligence (AI) and non-discrimination and racial equality, as well as other international human rights standards. AI applications are becoming a part of everyday life:…

Content type: Advocacy

AI-powered employment practices: PI's response to the ICO's draft recruitment and selection guidance

The volume of data collected and the methods used to automate recruitment with AI pose challenges for the privacy and data protection rights of candidates going through the recruitment process. Recruitment is a complex and multi-layered process, and so is the AI technology intended to serve this process at one or all of its stages. For instance, an AI-powered CV-screening tool using natural language processing (NLP) methods might collect keyword data on candidates, while an AI-powered video…

Content type: Advocacy

Why the EU AI Act fails migration

The EU AI Act seeks to provide a regulatory framework for the development and use of the most ‘risky’ AI within the European Union. The legislation outlines prohibitions for ‘unacceptable’ uses of AI, and sets out a framework of technical, oversight and accountability requirements for ‘high-risk’ AI when deployed or placed on the EU market.

Whilst the AI Act takes positive steps in other areas, the legislation is weak and even enables dangerous systems in the…

Content type: Press release

9 November 2023 - Privacy International (PI) has just published new research into UK Members of Parliament’s (startling lack of) knowledge on the use of Facial Recognition Technology (FRT) in public spaces, even within their own constituencies. Read the research published here in full: "MPs Asleep at the Wheel as Facial Recognition Technology Spells The End of Privacy in Public". PI has recently conducted a survey of 114 UK MPs through YouGov. Published this morning, the results are seriously…

Content type: Advocacy

We submitted a report to the Commission of Jurists on the Brazilian Artificial Intelligence Bill, focusing on the potential harms associated with the use of AI within schools and the additional safeguards and precautions that should be taken when implementing AI in educational technology. The use of AI in education technology and schools has the potential to interfere with the child’s right to education and right to privacy, which are upheld by international human rights standards…

Content type: News & Analysis

What if we told you that every photo of you, your family, and your friends posted on your social media or even your blog could be copied and saved indefinitely in a database with billions of images of other people, by a company you've never heard of? And what if we told you that this mass surveillance database was pitched to law enforcement and private companies across the world?

This is more or less the business model and aspiration of Clearview AI, a company that only received worldwide…

Content type: News & Analysis

Last month, the World Health Organization published its guidance on Ethics and Governance of Artificial Intelligence for Health. Privacy International was one of the organisations tasked with reviewing the report. We want to start by recognising that this is a very thorough report that does not shy away from acknowledging the risks and limitations of the use of AI in healthcare. As is often the case with guidance notes of this kind, its effectiveness will depend on the…

Content type: Examples

A growing number of companies (for example, San Mateo startup Camio and AI startup Actuate, which uses machine learning to identify objects and events in surveillance footage) are repositioning themselves as providers of AI software that can track workplace compliance with Covid safety rules such as social distancing and mask wearing. Amazon developed its own social distancing tracking technology for internal use in its warehouses and other buildings, and is offering it as a free tool to…

Content type: Examples

After governments in many parts of the world began mandating the wearing of masks in public, researchers in China and the US published datasets of images of masked faces scraped from social media sites to use as training data for AI facial recognition models. Researchers from the startup Workaround, who published the COVID19 Mask Image Dataset to GitHub in April 2020, claimed the images were not private because they were posted on Instagram and therefore permission from the posters was not…

Content type: Examples

Researchers are scraping social media posts for images of mask-covered faces to use to improve facial recognition algorithms. In April, researchers published the COVID19 Mask Image Dataset, which contains more than 1,200 images taken from Instagram, to GitHub; in March, Wuhan researchers compiled the Real World Masked Face Dataset, a database of more than 5,000 photos of 525 people they found online. The researchers have justified the appropriation by saying images posted to Instagram are public…

Content type: Examples

Many of the steps suggested in a draft programme for China-style mass surveillance in the US are being promoted and implemented as part of the government’s response to the pandemic, perhaps due to the overlap of membership between the National Security Commission on Artificial Intelligence, the body that drafted the programme, and the advisory task forces charged with guiding the government’s plans to reopen the economy. The draft, obtained by EPIC in a FOIA request, is aimed at ensuring that…

Content type: Long Read

Over the last two decades we have seen an array of digital technologies deployed in the context of border controls and immigration enforcement, with surveillance practices and data-driven immigration policies routinely leading to discriminatory treatment of people and undermining people’s dignity. And yet this is happening with little public scrutiny, often in a regulatory or legal void and without understanding or consideration of the impact on migrant communities at the border and…

Content type: Long Read

What Do We Know?

Palantir & the NHS

What You Don’t Know About Palantir in the UK

Steps We’re Taking

The Way Forward

This article was written by No Tech For Tyrants - an organisation that works on severing links between higher education, violent tech & hostile immigration environments.

Content type: Long Read

In April 2018, Amazon acquired “Ring”, a smart security device company best known for its video doorbell, which allows Ring users to see, talk to, and record people who come to their doorsteps.

What started out as a company pitch on Shark Tank in 2013 led to the $839 million deal, which has been crucial for Amazon to expand on its concept of the 21st-century smart home. It’s not just about convenience anymore: interconnected sensors and algorithms promise protection and provide a feeling of…

Content type: News & Analysis

Yesterday, Amazon announced that they will be putting a one-year suspension on sales of its facial recognition software Rekognition to law enforcement. While Amazon’s move should be welcomed as a step towards sanctioning company opportunism at the expense of our fundamental freedoms, there is still a lot to be done.

The announcement speaks of just a one-year ban. What exactly is Amazon expecting to change within that one year? Is one year enough to make the technology not discriminate…

Content type: Long Read

On 12 April 2020, citing confidential documents, the Guardian reported Palantir would be involved in a Covid-19 data project which "includes large volumes of data pertaining to individuals, including protected health information, Covid-19 test results, the contents of people’s calls to the NHS health advice line 111 and clinical information about those in intensive care".

It cited a Whitehall source "alarmed at the “unprecedented” amounts of confidential health information being swept up in the…

Content type: Press release

Today Privacy International, Big Brother Watch, medConfidential, Foxglove, and Open Rights Group have sent Palantir 10 questions about their work with the UK’s National Health Service (NHS) during the Covid-19 public health crisis and have requested that the contract be disclosed.

On its website Palantir says that the company has a “culture of open and critical discussion around the implications of [their] technology” but the company has so far…

Content type: Examples

Russia has set up a coronavirus information centre to monitor social media for misinformation about the coronavirus and to spot empty supermarket shelves using a combination of surveillance cameras and AI. The centre also has a database of contacts and places of work for 95% of those under mandatory quarantine after returning from countries where the virus is active. Sberbank, Russia's biggest bank, has agreed to pay for an app that will provide free telemedicine consultations.

Source:…

Content type: News & Analysis

In mid-2019, MI5 admitted, during a case brought by Liberty, that personal data was being held in “ungoverned spaces”. Much about these ‘ungoverned spaces’, and how they would effectively be “governed” in the future, remained unclear. At the moment, they are understood to be a ‘technical environment’ where personal data of unknown numbers of individuals was being ‘handled’. The use of ‘technical environment’ suggests something more than simply a compilation of a few datasets or databases.

The…