
Content Type: Frequently Asked Questions

Privacy is a fundamental right and shouldn’t be a luxury. But if you have a cheap phone, your data might be at risk.

Content Type: Explainer

Abstract

Over the past few years, smartphones have become incredibly inexpensive, connecting millions of people to the internet for the first time. While growing connectivity is undeniably positive, some device vendors have recently come under scrutiny for harvesting user data and for invasive data collection practices.

Because the Android operating system is open source, vendors can add pre-installed apps (often called “bundled apps” or “bloatware”) to mobile phones.…

Content Type: Video

Christopher Weatherhead and Eva Blum-Dumontet discuss the findings of Privacy International's report on the MyPhone MYA2 from the Philippines.

Content Type: App Analysis

The following is the output of Exodus Standalone, by Exodus Privacy, for Pinoy:

{
  "application": {
    "name": "Pinoy",
    "libraries": [],
    "handle": "com.zed.pinoy",
    "version_name": "4.19",
    "uaid": "D850D2DCD60B3482C1012D8DCE0382CF7D66AEB6",
    "permissions": [
      "android.permission.READ_PHONE_STATE",
      "android.permission.INTERNET",
      "android.permission.ACCESS_NETWORK_STATE",
      "android.permission.WRITE_EXTERNAL_STORAGE",
      "android.permission.…

Content Type: App Analysis

The following is the output of Exodus Standalone, by Exodus Privacy, for MyPhone Registration:

{
  "trackers": [],
  "apk": {
    "path": "/media/transfer/AndroidAnaylsis/Library/OriginalAPKs/MyPhoneRegistration.apk",
    "checksum": "584fb7efe352024b52e2584de6afd6944d5bdf038c6459200c5e4a021d3f096a"
  },
  "application": {
    "libraries": [],
    "version_code": "1",
    "permissions": [
      "android.permission.DISABLE_KEYGUARD",
      "android.permission.RECEIVE_BOOT_COMPLETED…

Content Type: App Analysis

The following is the output of Exodus Standalone, by Exodus Privacy, for Facebook Lite:

{
  "trackers": [],
  "apk": {
    "checksum": "8cf800fbe1626468b7af1f3b59dae657f22f0a9fb3070b80122af6171df67689",
    "path": "/media/transfer/AndroidAnaylsis/Queue/com.facebook.lite.apk"
  },
  "application": {
    "name": "Lite",
    "handle": "com.facebook.lite",
    "uaid": "79CC550EE0002725D1108B4580200A40D6AFA2FD",
    "version_name": "49.0.0.10.69",
    "version_code": "63889098",
    "…

Content Type: App Analysis

The following is the output of Exodus Standalone, by Exodus Privacy, for Brown Portal:

{
  "trackers": [],
  "apk": {
    "path": "/media/transfer/AndroidAnaylsis/Library/OriginalAPKs/BrownPortal.apk",
    "checksum": "154622e8812f2db94bf717fc4aef29a5a24569b5940e7640915cf5958acd4ad9"
  },
  "application": {
    "name": "Brown Portal",
    "version_name": "1.1.2",
    "permissions": [
      "android.permission.WRITE_EXTERNAL_STORAGE",
      "android.permission.INTERNET",
      "android.…
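The Exodus Standalone excerpts above are truncated, but they show the shape of a report: a top-level "trackers" list and an "application" object carrying fields such as "handle" and "permissions". As a minimal sketch, assuming a complete report follows that shape, a reader could summarise an app's trackers and declared permissions and flag a few commonly scrutinised ones. The SENSITIVE set is our own illustrative choice, not an Exodus Privacy or Android classification, and the sample report is hypothetical: it reuses the four Pinoy permissions visible above (the real list is cut short) and adds an empty "trackers" list for illustration.

```python
import json

# Illustrative selection only (assumption): not an official Exodus Privacy or Android list.
SENSITIVE = {
    "android.permission.READ_PHONE_STATE",
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.READ_CONTACTS",
    "android.permission.RECORD_AUDIO",
}


def summarise_report(raw: str) -> dict:
    """Summarise an Exodus-style JSON report (field names assumed from the excerpts)."""
    report = json.loads(raw)
    app = report.get("application", {})
    permissions = app.get("permissions", [])
    return {
        "handle": app.get("handle"),
        "tracker_count": len(report.get("trackers", [])),
        "permission_count": len(permissions),
        # Keep only the permissions that appear in our illustrative SENSITIVE set.
        "flagged": sorted(p for p in permissions if p in SENSITIVE),
    }


# Hypothetical complete report built from fields visible in the Pinoy excerpt.
sample = json.dumps({
    "trackers": [],
    "application": {
        "handle": "com.zed.pinoy",
        "permissions": [
            "android.permission.READ_PHONE_STATE",
            "android.permission.INTERNET",
            "android.permission.ACCESS_NETWORK_STATE",
            "android.permission.WRITE_EXTERNAL_STORAGE",
        ],
    },
})

print(summarise_report(sample))
```

Using `.get()` with defaults means a report missing a section (as the Pinoy excerpt appears to lack "trackers") is summarised rather than raising a `KeyError`.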

Content Type: Advocacy

Earlier this year, the UN Special Rapporteur on freedom of expression called for an “immediate moratorium on the global sale and transfer of private surveillance technology”, warning that “unlawful surveillance continues without evident constraint” and is leading to arbitrary detention, torture and possibly extrajudicial killings. Without urgent action, there is every reason to believe that the situation will only get worse for people around the world. At Privacy…

Content Type: Report

In September 2019, Privacy International filed 10 access to documents requests to EU bodies regarding the transfer of surveillance capabilities to non-EU countries. The requests seek documents providing information on the transfer of personal data, surveillance technology, training, financing, and legislation to non-EU countries. The requests were submitted to:

Frontex

Europol

The European Union Agency for Law Enforcement Training

The Directorate-General for Economic and…

Content Type: News & Analysis

Photo: The European Union

“Border Externalisation”, the transfer of border controls to foreign countries, has in the last few years become the main instrument through which the European Union seeks to stop migratory flows to Europe. Similar to the strategy being implemented under Trump’s administration, it relies on modern technology and on training and equipping authorities in third countries to push the border far beyond Europe's shores.

It is enabled by the adoption…

Content Type: Key Resources

Content Type: News & Analysis

Photo by Francesco Bellina

The wars on terror and migration have seen international funders sponsoring numerous border control missions across the Sahel region of Africa. Many of these rely on funds supposed to be reserved for development aid and lack vital transparency safeguards. In the first of a series, freelance journalist Giacomo Zandonini sets the scene from Niger.

Surrounded by a straw-yellow stretch of sand, the immense base of the border control mobile company of Maradi, in southern…

Content Type: Long Read

Photo: Francesco Bellina

Driven by the need to never again allow organised mass murder of the type inflicted during the Second World War, the European Union has brought its citizens unprecedented levels of peace underpinned by fundamental rights and freedoms.

It plays an instrumental role in protecting people’s privacy around the world; its data protection regulation sets the bar globally, while its courts have been at the forefront of challenges to unlawful government surveillance…

Content Type: Advocacy

Agreed in 2015, the EU Trust Fund for Africa uses development aid and cooperation funds to manage and deter migration to Europe. It currently funds numerous projects that present urgent threats to privacy, including developing biometric databases, training security units in surveillance, and supplying them with surveillance equipment. No decision has yet been made about the future of the Fund, pending the outcome of negotiations on the EU's next budget.

This paper…

Content Type: Advocacy

The Neighbourhood, Development and International Cooperation Instrument (NDICI) is an external instrument proposed under the EU's next budget (2021-2027). It will fund surveillance, border security, and migration management projects in third countries that are currently carried out under various funds due to form part of the NDICI. Several of these projects raise significant concerns regarding the right to privacy.

This paper summarises the NDICI and provides a…

Content Type: Advocacy

This report, authored by Lorand Laskai, a JD candidate at Yale Law School, provides an overview of the surveillance technology and training that the Chinese government supplies to countries around the world. China, European countries, Israel, the US, and Russia are all major providers of such surveillance worldwide, as are multilateral organisations such as the European Union. Countries with the largest defence and security sectors are transferring technology and…

Content Type: Advocacy

The UK government's Department for Digital, Culture, Media and Sport, along with the Government Digital Service, issued a Call for Evidence on Digital Identity.

In our response, Privacy International reiterated the need for a digital identity system to have a clear purpose, in this case to enable people to prove their identity online to access services. We highlighted the dangers of some forms of ID (such as those relying on a centralised database, unique ID numbers, or biometrics). The…

Content Type: Examples

A new investigative report from Sharona Coutts at Rewire exposed how anti-choice groups, including at least one adoption agency, were using a technology called "geofencing" to identify individuals they believed were considering abortion and then target them with ads.

Two groups, a clearinghouse that operates many crisis pregnancy centers and a large adoption agency called Bethany Christian Services, were reported to have hired Copley Advertising to provide them with this…

Content Type: Examples

Ahead of the May 2018 Irish referendum on amending the Constitution of Ireland to allow parliament to legislate for abortion, Google decided to stop all advertising relating to the referendum on all of its advertising platforms, including AdWords and YouTube.

This followed decisions by Facebook to stop accepting referendum-related advertising funded by organisations outside Ireland, and by Twitter not to allow any advertising in relation to the…

Content Type: News & Analysis

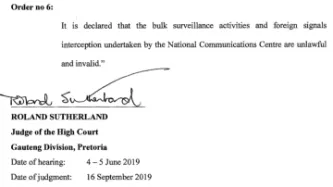

Today, in a historic decision, the High Court of South Africa in Pretoria declared that bulk interception by the South African National Communications Centre is unlawful and invalid.

The judgment is a powerful rejection of years of secret and unchecked surveillance by South African authorities against millions of people - irrespective of whether they reside in South Africa.

The case was brought by two applicants, the amaBhungane Centre for Investigative Journalism and journalist Stephen…

Content Type: Examples

Bethany Christian Services, an international pregnancy support and adoption agency, is launching a programme with Copley Advertising to send targeted ads to individuals visiting Planned Parenthood clinics, abortion clinics, methadone clinics and high-risk areas (AHPA). The targeting will use geofencing to reach smartphones located within the selected locations.
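Geofencing, in this sense, means testing whether a phone's reported location falls inside a boundary drawn around a chosen site. As a minimal sketch, assuming a circular fence defined by a centre point and a radius, and location fixes given as latitude/longitude in degrees, the test reduces to a great-circle (haversine) distance check. The coordinates and the 100 m radius are hypothetical, and this is not a description of Copley Advertising's actual system, whose workings are not set out here.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def inside_fence(device, centre, radius_m):
    """True if the device's (lat, lon) fix lies within radius_m of the fence centre."""
    return haversine_m(*device, *centre) <= radius_m


# Hypothetical fence: 100 m around an arbitrary point.
fence_centre = (51.5000, -0.1200)
print(inside_fence((51.5005, -0.1200), fence_centre, 100))  # True: about 56 m from the centre
print(inside_fence((51.5020, -0.1200), fence_centre, 100))  # False: about 222 m from the centre
```

Any phone whose fix passes this test can then be added to an audience for ads, which is why a fence drawn around a clinic effectively labels everyone who enters it.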

Source: https://www.liveaction.org/news//adoption-agency-send-pro-life-ads-smartphones-inside-abortion-…

Content Type: News & Analysis

Photo by Sharon McCutcheon on Unsplash

In May, the United Nations Special Rapporteur on extreme poverty and human rights, Philip Alston, invited all interested governments, civil society organisations, academics, international organisations, activists, corporations and others to provide written input for his thematic report on the human rights impacts, especially on those living in poverty, of the introduction of digital technologies in the implementation of national social protection…

Content Type: Long Read


Using Facebook, Google, and Twitter’s ad libraries, PI has tried to understand how political ads are targeted in the UK. This information – which should be very clear on political ads – is instead being squirreled away under multiple clicks and confusing headings.

Importantly, in most countries around the world, users cannot understand why they’re being targeted with political ads on these platforms at all. This is because Facebook, Google, and Twitter have taken…

Content Type: News & Analysis

Photo: The European Union

On 2 September 2019, Privacy International, together with 60 other organisations, signed an open letter to the European Parliament to express our deep concern about upcoming EU policy proposals which undermine the EU’s founding values of human rights, peace and disarmament.

Since 2017, the EU has diverted funds towards security research and security capacity-building in countries around the world. The proposal for the EU's next budget (2021-2027) will…

Content Type: Examples

Denmark released 32 prisoners as part of an ongoing review of 10,700 criminal cases, after serious questions arose regarding the reliability of geolocation data obtained from mobile phone operators. Among the various problems with the software used to convert the phone data into usable evidence, it was found that the system connected the phones to several towers at once, sometimes hundreds of kilometres apart, recorded the origins of text messages incorrectly and got the location of specific…

Content Type: Report

“...a mobile device is now a huge repository of sensitive data, which could provide a wealth of information about its owner. This has in turn led to the evolution of mobile device forensics, a branch of digital forensics, which deals with retrieving data from a mobile device.”

The situation in Scotland regarding the use of mobile phone extraction has come a long way since the secret trials were exposed. The inquiry by the Justice Sub-Committee, which commenced on 10 May 2018, has brought much…

Content Type: News & Analysis

The global counter-terrorism agenda is driven by a group of powerful governments and industry actors with a vested political and economic interest in pushing for security solutions that increasingly rely on surveillance technologies at the expense of human rights.

To facilitate the adoption of these measures, a plethora of bodies, groups and networks of governments and other interested private stakeholders develop norms, standards and ‘good practices’ which often end up becoming hard national laws…

Content Type: Advocacy

Privacy International's submission to the consultation initiated by the UN Special Rapporteur on counter-terrorism and human rights on the impact on human rights of the proliferation of “soft law” instruments and related standard-setting initiatives and processes in the counter-terrorism context.

In this submission, Privacy International notes its concern that some of these “soft law” instruments have negative implications for the right to privacy, leading to violations of other human…

Content Type: News & Analysis

Photo by Jake Hills on Unsplash

Our research has shown how some apps, like Maya by Plackal Tech and MIA by Mobbap Development Limited, were (at the time of the research) sharing your most intimate data about your sexual life and medical history with Facebook.

Other apps, like Mi Calendario, Ovulation Calculator by Pinkbird and Linchpin Health, were letting Facebook know every time you opened the app.

We think companies like these should do better and we are pleased to see some of them have already…