Real-time facial recognition should never be coupled with body-worn cameras

Citing inaccuracy, a major manufacturer declines to combine facial recognition with body-worn cameras. But accurate or not, real-time facial recognition should never be coupled with police body-worn cameras.

Axon Enterprise Inc., a major manufacturer of police body-worn cameras and digital evidence management systems that also partners with Microsoft to provide services to law enforcement, announced that the company would refrain from equipping police body-worn cameras with facial recognition technology, at least for now.

The announcement came in response to recommendations issued by Axon's independent AI & Policing Technology Ethics Board, which concluded that facial recognition is:

“not currently reliable enough to ethically justify its use on body-worn cameras” and that such use should not occur “until the technology performs with far greater accuracy and performs equally well across races, ethnicities, genders, and other identity groups.”

But concerns relating to the integration of face recognition with body-worn cameras go beyond issues of accuracy. This capability would subvert the purpose of body-worn cameras as a tool of police accountability and transparency by turning them into a tool for mass surveillance.

Axon’s CEO Rick Smith told Wired that he is confident problems with accuracy and bias “will get solved over time,” and the Ethics Board report encourages investment in research to make training data more representative. But even if facial recognition could be completely accurate and non-discriminatory, we believe that body-worn cameras should never have real-time facial recognition capabilities.

Why body-worn cameras should never have real-time facial recognition capabilities

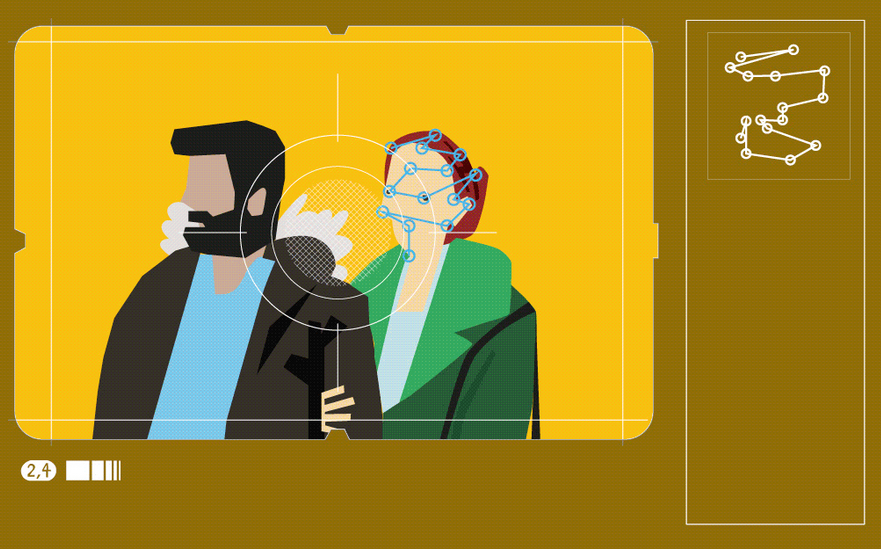

Combining facial recognition with body-worn cameras creates an impermissibly intrusive form of surveillance technology. Our faces are central to who we are, we cannot readily mask or obscure them, and cataloguing our faces and expressions in databases that can be stored and mined indefinitely carries many risks. As people move through public spaces or interact with police, they do not anticipate or expect that their faces will be converted into a biometric map so that their facial features can be recorded, catalogued, and analysed without their awareness or consent.

The fact that people's faces may be visible as they navigate public spaces does not negate the privacy and other human rights violations that would accompany the coupling of facial recognition with body-worn cameras. According to research by the Electronic Frontier Foundation, “[p]eople are much less likely to seek help from the police if they know or suspect . . . that they can be identified in real time or in the future.” People may be deterred not only from approaching police in the first place, but also from entering public spaces or attending events where police may be present or patrolling. They may also be discouraged from expressing themselves, associating with others, or organising to petition the government for change.

Thus, even if facial recognition were perfectly accurate, combining that technology with police body-worn cameras would violate people's right to privacy and the other fundamental rights and freedoms that privacy supports. It would also contribute to shrinking the civic spaces necessary for exercising our rights and freedoms and for engaging in democratic participation and dissent.

It is important to underscore that perfectly accurate facial recognition is purely hypothetical, because there are inherent problems with how facial recognition software is designed and trained and with how we collect, label, and classify the data it is fed. The problematic process of trying to rid this technology of false positives and false negatives would inevitably come at the expense of people in vulnerable situations, whom we should not force to endure the consequences of having this technology trialled on them. Simply by seeking to make facial recognition more accurate, we would further replicate and entrench existing discrimination and inequalities.

What You Can Do – Our Neighbourhood Watched Campaign

Axon’s Ethics Board affirms that:

[n]o jurisdiction should adopt face recognition technology without going through open, transparent, democratic processes, with adequate opportunity for genuinely representative public analysis, input, and objection.

We agree. As we have stated in our Neighbourhood Watched campaign, police and governments around the world regularly fail to ensure that new technology, when it is rolled out, is deployed in a transparent and accountable way. This applies not only to facial recognition technology but to a whole range of concerning surveillance tech.

To protect people's rights and guard against abuses, it is vital for the public to have a meaningful say in whether their local police force should be allowed to use such highly intrusive technologies. We believe these technologies should not be bought or used without robust and transparent public consultation and the approval of locally elected representatives. These consultative processes should also centre the voices of the people most adversely impacted by such technologies and their use, including “survivors of mass incarceration, survivors of law enforcement harm and violence, and community members who live closely among both populations.”

If you’re in the UK, you can take two minutes to write to your local Police and Crime Commissioner to tell them how you feel about police surveillance of your community, and download our campaign pack to help you organise as a community.

If you're in the US, you can learn more about policing technology and what you can do about it from our friends at the Electronic Frontier Foundation (EFF).

If you’re anywhere else in the world and want to raise awareness about threats to your community by using our campaign materials, get in touch with us at [email protected].