New Year, New Noom? What our re-testing of the weight-loss platform taught us

Following its 2021 research on diet ads sharing personal data with third parties, PI ran a follow-up analysis of Noom's online test to assess changes and reflect on publishers' data sharing practices.

- PI's original research on diet ads and online tests found that personal health data was being shared with third parties

- Noom was one of three companies providing diet programmes whose data processing practices we researched in 2021

- Taking our 2021 research as a starting point, we re-tested the Noom platform

- While it took Noom longer than the other sites tested, and while there is still room for improvement, significant positive changes have taken place

- This shows that privacy-preserving measures can be taken without compromising the product or service provided

In August 2021, PI published the report An unhealthy diet of targeted ads where we uncovered how personal data was shared by diet companies through their online ads and online testing. Our findings were quite grim, with highly sensitive personal data shared with third parties without consent.

Following this initial report, we performed follow-up research with the same methodology and by September 2021 we reported a number of positive changes from two of these websites: BetterMe and VShred. While not perfect, we welcomed these improvements, about which you can read at the top of this page, as they showed acknowledgment, if not understanding, that abusive data sharing practices don't have to be part of a company's strategy for it to succeed.

Noom, on the other hand, a startup founded in 2007 that has gone through multiple multi-million-dollar fundraising rounds, had not implemented any positive change at the time of that re-test. This was particularly concerning given that Noom was the company with the highest public profile among those tested. A year later, it's time to re-run our original research and take stock of what has, and hasn't, changed.

Our initial - and worrying - findings

First, a quick reminder of what Privacy International found in our original research. If you've read our original research, you can go straight to the next section!

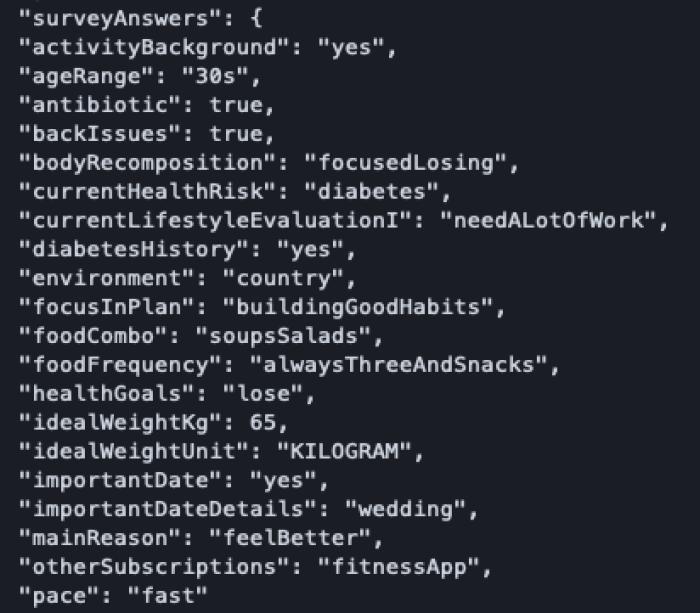

Within its online test, which aims to help future customers assess how Noom can help them, Noom collects a range of personal health information. This includes height, weight, target weight, gender, age range, race, reasons for wanting to lose weight, physical activity limitations, dietary restrictions and food allergies, and how you feel about Cognitive Behavioural Therapy, as well as health conditions such as diabetes, NASH, depression and more.

What we found through our technical analysis and subsequent Data Subject Access Request was that Noom was sharing all of this information with a number of third parties, both in real time while the test was being taken and at a later point in time. Personal data, including all test answers, the email address and unique identifiers that could be used to cross-reference data and re-identify the user, was shared with Braze, FullStory, Mixpanel and Zendesk.

Noom's privacy policy was also relatively permissive, as it stated that Noom may share personal data with “business partners”, who may use this data to market their or others’ products to users, yet "business partners" were not defined in the policy. It also stated that Noom may combine the data it collects through its website and app with data obtained from third parties, to build enhanced profiles of users and deliver more targeted marketing.

Additionally, Noom's online test didn't have a cookie banner, despite placing third-party trackers for Bing, Google, Facebook, FullStory, Optimizely, Pinterest, Stripe and LinkedIn.

Long story short: sensitive health data was shared with companies on the spot, a data processing activity which the privacy policy failed to mention and which users engaging with the platform couldn't avoid. This means the data shared could be re-shared, processed and used for other purposes, without users' knowledge or consent.

A year and a half later: progress hindered by new problems

We re-tested the Noom platform this year by following the exact same methodology. This means completing the Noom online test while using the open-source HTTP Toolkit to intercept HTTP(S) requests and analyse traffic between the Noom platform and third parties. We subsequently submitted a Data Subject Access Request to assess whether data was also being shared at a later point in time.
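To illustrate the kind of traffic analysis described above, here is a minimal sketch that counts requests to hosts outside a site's own domain. It assumes the intercepted traffic has been exported in the standard HAR (HTTP Archive) format; the function name `third_party_hosts`, the domain `diet.example` and the sample entries are all hypothetical and are not taken from our capture.

```python
from collections import Counter
from urllib.parse import urlsplit


def third_party_hosts(har: dict, first_party_domain: str) -> Counter:
    """Count captured requests per host outside the first-party domain."""
    counts: Counter = Counter()
    for entry in har["log"]["entries"]:
        host = urlsplit(entry["request"]["url"]).hostname or ""
        # A host is first-party if it is the domain itself or a subdomain of it.
        is_first_party = (
            host == first_party_domain
            or host.endswith("." + first_party_domain)
        )
        if not is_first_party:
            counts[host] += 1
    return counts


# Hypothetical capture in the HAR shape (log -> entries -> request).
sample_har = {
    "log": {
        "entries": [
            {"request": {"method": "GET", "url": "https://www.diet.example/quiz"}},
            {"request": {"method": "POST", "url": "https://api.diet.example/answers"}},
            {"request": {"method": "GET", "url": "https://tracker.example/collect?id=1"}},
            {"request": {"method": "GET", "url": "https://tracker.example/collect?id=2"}},
            {"request": {"method": "GET", "url": "https://cdn.ads.example/pixel.gif"}},
        ]
    }
}

# List third-party hosts, most frequently contacted first.
for host, hits in third_party_hosts(sample_har, "diet.example").most_common():
    print(host, hits)
```

With a real export, the dictionary would come from `json.load` on the HAR file. A simple suffix check like this one treats every other domain as a third party, so domains owned by the same company under a different name would need to be added by hand.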

While health data processing has continued, a few changes have taken place.

- Noom now displays a cookie banner powered by OneTrust. However, some first-party cookies for Google and Facebook are set by default and remain after cookies are rejected.

- When the user declines to consent, no data appears to be shared with Mixpanel, Braze, FullStory or Zendesk, either while taking the test or later on, as confirmed by the DSAR.
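The cookie check in the first point above can be sketched as a comparison of two snapshots: the cookies present before any choice is made in the banner, and the cookies present after rejecting. This is only an illustrative sketch; the function name, the cookie names and the list of cookies treated as strictly necessary are hypothetical and do not reflect what Noom actually sets.

```python
from __future__ import annotations


def cookies_surviving_rejection(
    before_choice: set[str],
    after_reject: set[str],
    strictly_necessary: set[str],
) -> set[str]:
    """Return cookies set before any choice that still exist after rejection,
    ignoring names the tester treats as strictly necessary."""
    return (before_choice & after_reject) - strictly_necessary


# Hypothetical cookie names, for illustration only.
before = {"session_id", "consent_state", "analytics_id", "ad_click_id"}
after = {"session_id", "consent_state", "analytics_id"}
necessary = {"session_id", "consent_state"}

print(sorted(cookies_surviving_rejection(before, after, necessary)))
```

Any name this returns is a cookie that was set by default and that rejecting did not remove, which is the pattern worth a closer look.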

While those are great strides in a good direction (we really appreciate seeing so few requests to third parties), we unfortunately uncovered new problematic behaviours, and some issues seem unresolved:

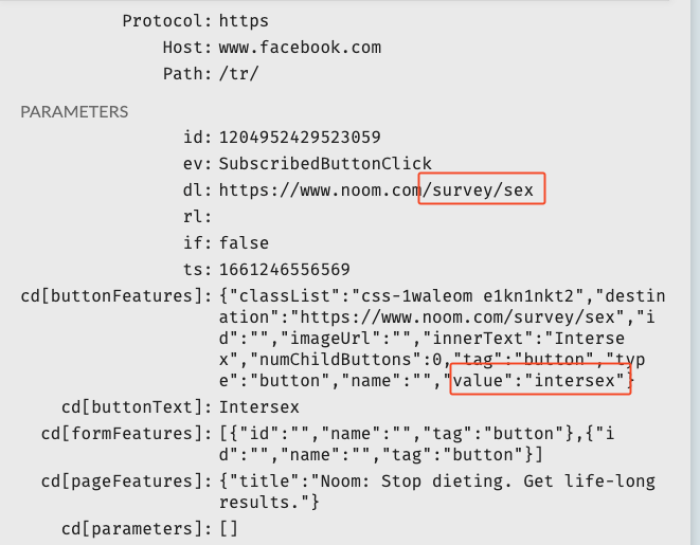

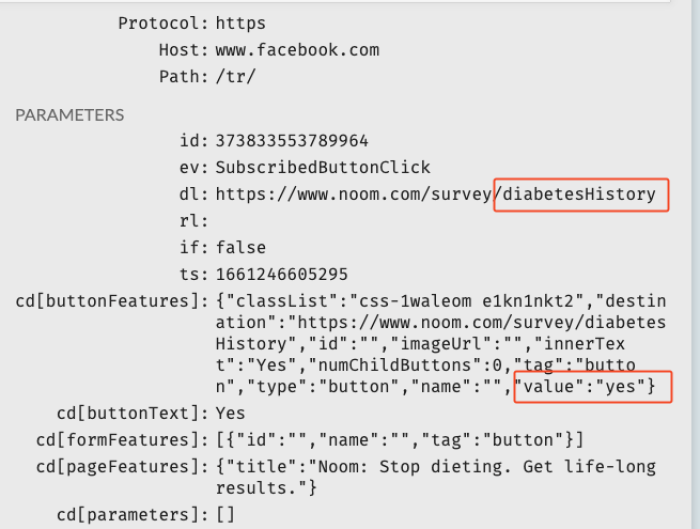

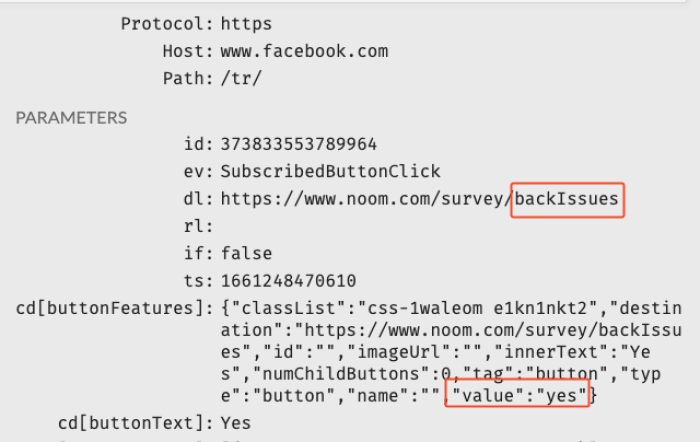

- Most survey answers (including sensitive data such as health information) are now shared with Facebook via a GET request, although fewer than before: gender/sexual orientation and race are no longer included

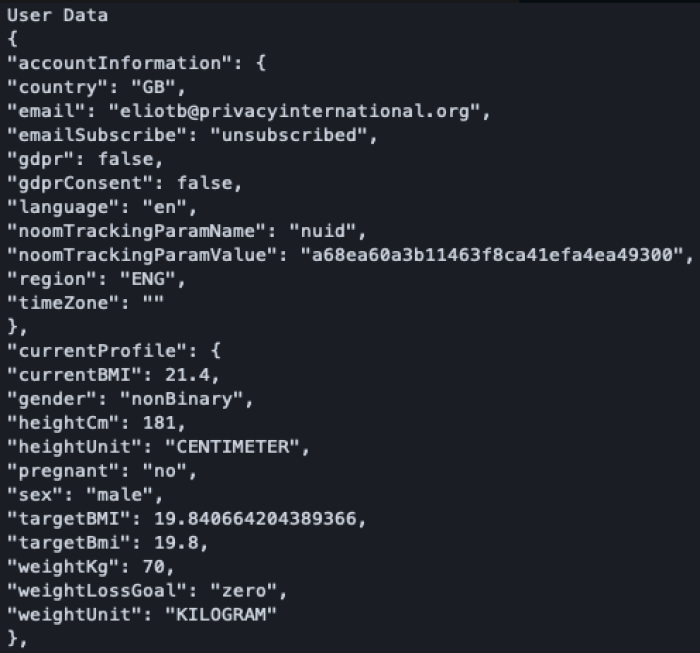

- All of the test answers were shared with Iterable, a marketing platform, despite no notice of this in either Noom’s cookie banner or its Privacy Policy. While we didn't observe the data being shared with Iterable when taking the test, the response to our Data Subject Access Request included a file under Iterable's name with all our data. The data was organised in JSON format and named USER DATA.

- Cookies from numerous third parties are placed when the user accepts cookies (Bing, LinkedIn, Clarity, ROKT, Pinterest, shop.pe, liadm, Snapchat, Amazon...).
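The Facebook finding above concerns survey answers travelling in the query string of a GET request. As a rough sketch of how such a request can be inspected, the snippet below parses a URL and reports any parameters whose names appear on a watchlist. The URL, the parameter names and the watchlist are hypothetical and do not reproduce the actual requests we observed.

```python
from urllib.parse import parse_qsl, urlsplit


def flag_sensitive_params(url: str, sensitive_keys: set) -> dict:
    """Return the query parameters of `url` whose names are on the watchlist."""
    query = urlsplit(url).query
    return {
        key: value
        for key, value in parse_qsl(query, keep_blank_values=True)
        if key.lower() in sensitive_keys
    }


# Hypothetical watchlist and request URL, for illustration only.
SENSITIVE_KEYS = {"weight", "target_weight", "diabetes", "depression"}
sample_url = (
    "https://tracker.example/event"
    "?ev=QuizStep&weight=82&target_weight=70&diabetes=yes&lang=en"
)

flagged = flag_sensitive_params(sample_url, SENSITIVE_KEYS)
print(flagged)
```

Matching on exact parameter names is deliberately simple. Real trackers may nest or encode values, so a check like this is a starting point for manual review, not a replacement for it.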

While this behavior is an improvement on the previously observed one, we still don't think sensitive health data should be shared lightly. While we haven't looked at how this data might be shared when accepting cookies, it is possible the same data sharing occurs with other companies as previously observed in our research.

Online tracking by default is not a necessity

This follow-up research came with a few interesting lessons.

First, this is a reminder that platforms continue to share sensitive data with third parties. Despite GDPR taking effect in 2018 and the numerous complaints filed against such practices, the lack of enforcement means they are still very much present. Noom is not the first platform to have done this, as exposed by PI in our work on mental health websites and period-tracking apps. Nonetheless, invasive data collection shouldn’t be the norm anymore, and any business that purports to process personal data should ensure that it is in full compliance with its data protection obligations. If for no other reason, then at least because putting consumers' privacy at risk can carry a liability cost.

Second, this follow-up shows that change can happen. Similarly to the changes we observed in the sites analysed in our mental health research, once exposed and put under the spotlight, platforms and companies are capable of reviewing their practices for the better.

Finally, this follow-up also illustrates that abusive data-sharing practices don't have to be core to the deployment of any online service. Removing data sharing with third parties hasn't affected Noom's revenue generation, and if it had, it would mean that their business is selling data, not providing weight loss services. Whether platforms intend to exploit users' personal data or simply didn't realise such data sharing would happen, there is no valid excuse anymore for this sort of practice, in particular for a big company like Noom.

Now, as a user of platforms like Noom, the last protection you have is to exercise care when inputting data into platforms that provide health-related services. The degree of caution should be proportionate to the sensitivity of the data provided.

We also suggest you have a read of our guides to protect yourself from online tracking. While they won't prevent your personal information from being collected unlawfully, they're a good place to start reclaiming your privacy online!

Noom has been approached to respond to this report before publication, but we have received no response.