Content type: Press release

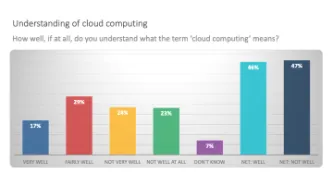

A large number of smartphone apps store data in the cloud. Using cloud extraction technology, law enforcement can access these vast troves of data from devices and from popular apps with the push of a button.

Mobile phones remain the most frequently used and most important digital source for law enforcement investigations. Yet it is not just what is physically stored on the phone that law enforcement are after, but what can be accessed from it, primarily data stored in the cloud.…

Content type: News & Analysis

Content type: Press release

Following Police Scotland’s announcement that it will be rolling out cyber kiosks to police stations across Scotland, Open Rights Group and Privacy International have released a statement:

Open Rights Group and Privacy International called on Police Scotland to hold off on the roll-out until the Scottish Government reformed the law to provide an overarching framework for the seizure of electronic devices in Scotland in line with human rights standards. That recommendation has not been heeded by…

Content type: Case Study

Slavery, servitude, and forced labour are absolutely forbidden today, as is anything that seeks to undermine or limit that restriction. The horrific reality, however, is that modern slavery remains a significant global issue.

Human trafficking is one form of modern slavery. It involves the recruitment, harbouring or transporting of people into a situation of exploitation through the use of violence, deception or coercion and forcing them to work against their will.

Human traffickers do…

Content type: Case Study

On 3 December 2015, four masked men in plain clothes arrested Isnina Musa Sheikh in broad daylight (at around 1 p.m.) as she served customers at her food kiosk in Mandera town, in the North East of Kenya, Human Rights Watch reported. The men didn’t identify themselves, but they were carrying pistols and M16 assault rifles, commonly used by Kenyan defence forces, and the cars that took her away had their insignia on the doors. Isnina’s body was discovered three days later in a shallow grave about…

Content type: Case Study

The prohibition against torture is absolute. There are no exceptional circumstances whatsoever which can be used to justify torture.

And yet torture is still being carried out by state officials around the world, driven by states’ ability to surveil dissidents and intercept their communications.

In 2007, French technology firm Amesys (a subsidiary of Bull) supplied sophisticated communications surveillance systems to the Libyan intelligence services. The systems allegedly permitted the…

Content type: Advocacy

Privacy International (PI) welcomes the opportunity to respond to this consultation. Established in 1990, PI is a non-profit organisation based in London, dedicated to defending the right to privacy around the world.

The right to privacy is one of the precedents used to establish reproductive rights, and it is established by several international and regional legal instruments. The primary link between the two stems from the fact that laws and policies which impinge upon individuals’ rights to…

Content type: News & Analysis

In the run-up to the UK General Election on 12 December 2019, Privacy International, joined by other organisations, called on political parties to come clean about their use of data. The lack of response to these demands, combined with other evidence gathered by groups during the run-up to the election, demonstrates that current regulations are not fit for the digital era.

This briefing, to which Privacy International contributed together with Demos, the Computational Propaganda Project at the…

Content type: Long Read

Over the coming months, PI is going to start to look a bit different. We will have a new logo and a whole new visual identity.

And in turn, our new visual identity will only be one step in a wider process of PI reconnecting with our core mission and communicating it more effectively to you, following on from extensive consultation with our staff, board, our supporters and our international partners.

Our current black ‘redacted’ Privacy International logo, and the austere Cold War era dossier…

Content type: Case Study

In the Xinjiang region of Western China, surveillance is being used to facilitate the government’s persecution of 8.6 million Uighur Muslims.

Nurjamal Atawula, a Uighur woman, described how, in early 2016, police began regularly searching her home and calling her husband into the police station, as a result of his WeChat activity.

WeChat is a Chinese multi-purpose messaging, social media and mobile payment app. As of 2013, it was being used by around 1 million Uighurs, but in 2014 WeChat was…

Content type: Case Study

The way digital technologies are deployed has radically influenced the distribution of power in our societies. Digital information can be created, transmitted, stored and distributed far more easily and cheaply than ever before, on a scale that was previously unimaginable. This information is power in the hands of those that know how to use it. Governments and companies alike have been racing to occupy as much space as possible, increasing their surveillance and their knowledge about each and…

Content type: News & Analysis

Updated January 18th 2021

The Government of Myanmar is pushing ahead with plans to require anyone buying a mobile SIM card to be fingerprinted and hand over their ID cards, according to procurement documents circulated to prospective bidders.

The plans are a serious threat to privacy in a country lacking any data protection or surveillance laws and where minorities are systematically persecuted, and must be scrapped.

According to technical requirements developed by Myanmar’s Post and…

Content type: Landing Page

We campaign for a world where technology will empower and enable us, not exploit our data for profit and power. This involves uncovering and researching misuse of our data, and shining a light on how technologies are being used to track, monitor, profile and manipulate us.

Content type: Advocacy

This stakeholder report is a submission by Privacy International (PI), the National Coalition of Human Rights Defenders Kenya (NCHRD-K), The Kenya Legal & Ethical Issues Network on HIV and AIDS (KELIN), and Paradigm Initiative.

PI, NCHRD-K, KELIN, and Paradigm Initiative wish to bring their concerns about the protection and promotion of the right to privacy, and other rights and freedoms that privacy supports, for consideration in Kenya’s upcoming review at the 35th session of the Working…

Content type: Long Read

Following a series of FOI requests from Privacy International and other organisations, the Department of Health and Social Care has now released its contract with Amazon regarding the use of NHS content by Alexa, Amazon’s virtual assistant. The contract is largely redacted, and we contest the Department of Health’s take on the notion of public interest.

Remember when in July this year the UK government announced a partnership with Amazon so that people would now…

Content type: Long Read

Recently the role of social media and search platforms in political campaigning and elections has come under scrutiny. Concerns range from the spread of disinformation, to profiling of users without their knowledge, to micro-targeting of users with tailored messages, to interference by foreign entities, and more. Significant attention has been paid to the transparency of political ads, and more broadly to the transparency of online ads.

As discussions of potential solutions evolve from banning…

Content type: Advocacy

As we come to the end of 2019, major weaknesses remain in the transparency that all major platforms have so far provided to users. This piece gives an overview of these weaknesses and suggests steps to move forward in 2020.

Tying heightened transparency to "political" ads introduces a variety of problems. For a start, each platform has defined "political" differently, with some having wider definitions and some incredibly narrow ones. When an ad is not designated as political, oftentimes it is provided…

Content type: Explainer

PI has long worked on the exploitation of data by companies. We've filed complaints against companies that constantly track you around the internet, we've shown how numerous phone apps share data with Facebook, we've exposed how advertisers track visitors on mental health websites, we've shown how period tracking apps collect and share data of users (including whether they are having unprotected sex or not!), exposed how major tech companies are not providing meaningful transparency to their…

Content type: News & Analysis

This creates a restraint on all people who merely seek to do as people everywhere do: to communicate freely.

This is a particularly worrying development as it builds an unreliable, pervasive, and unnecessary technology on top of an unnecessary and exclusionary SIM card registration policy. Forcing people to register to use communication technology eradicates the potential for anonymity of communications and enables pervasive tracking and communications surveillance.

Building facial recognition…

Content type: News & Analysis

On 24 October 2019, the Swedish government submitted a new draft proposal to give its law enforcement broad hacking powers. On 18 November 2019, the Legal Council (“Lagråd”), an advisory body assessing the constitutionality of laws, approved the draft proposal.

Privacy International believes that even where governments conduct hacking in connection with legitimate activities, such as gathering evidence in a criminal investigation, they may struggle to demonstrate that hacking as…

Content type: Advocacy

As any data protection lawyer and privacy activist will attest, there’s nothing like a well-designed and enforced data protection law to keep the totalitarian tendencies of modern Big Brother in check.

While the EU’s data protection rules aren’t perfect, they at least provide some limits over how far EU bodies, governments and corporations can go when they decide to spy on people.

This is something the bloc’s border control agency, Frontex, learned recently after coming up with a plan to…

Content type: News & Analysis

In the last few months strong concerns have been raised in the UK about how police use of mobile phone extraction dissuades rape survivors from handing over their devices: according to a Cabinet Office report leaked to the Guardian, almost half of rape victims are dropping out of investigations even when a suspect has been identified. The length of time it takes to conduct extractions (with victims paying bills whilst the phone is with the police) and the volume of data obtained by the…

Content type: News & Analysis

Yesterday, we found out that Google has been reported to collect health data records as part of a project it has named “Project Nightingale”. In a partnership with Ascension, Google has purportedly been amassing data for about a year on patients in 21 US states in the form of lab results, doctor diagnoses and hospitalization records, among other categories, which amount to a complete health history, including patient names and dates of birth.

This comes just days after the news of Google'…

Content type: News & Analysis

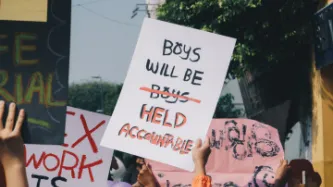

Photo by Michelle Ding on Unsplash

Pat Finucane was killed in Belfast in 1989. As he and his family ate Sunday dinner, loyalist paramilitaries broke in and shot Pat, a high-profile solicitor, in front of his wife and children.

The Report of the Patrick Finucane Review in 2012 expressed “significant doubt as to whether Patrick Finucane would have been murdered by the UDA [Ulster Defence Association] had it not been for the different strands of involvement by the…

Content type: Long Read

Miguel Morachimo, Executive Director of Hiperderecho. Hiperderecho is a non-profit Peruvian organisation dedicated to facilitating public understanding and promoting respect for rights and freedoms in digital environments.

The original version of this article was published in Spanish on Hiperderecho's website.

Where does our feeling of insecurity come from? As we walk around our cities, we are being observed by security cameras most of the time. Our daily movement, call logs, and internet…

Content type: News & Analysis

Even if we are not Fitbit users, we all need to stop and think about the implications of this merger. There is a reason that our health data is subject to higher levels of protection: it is intimate, it reveals vast amounts about our everyday lives, and the potential consequences if it is exploited can be devastating. Google should be keeping its hands off our health data.

Sign our letter to the European Commission, asking them to block the Google/Fitbit merger.

Let's tell Google, 'NOT ON OUR WATCH!'

Content type: Long Read

Photo by Kristina Flour on Unsplash

The British government needs to provide assurances that MI5’s secret policy does not authorise people to commit serious human rights violations or to cover up such crimes.

Privacy International, along with Reprieve, the Committee on the Administration of Justice, and the Pat Finucane Centre, is challenging the secret policy of MI5 to authorise or enable its so-called “agents” (not MI5 officials) to commit crimes here in the UK.

So far we have discovered…

Content type: Examples

A woman was killed by a spear to the chest at her home in Hallandale Beach, Florida, north of Miami, in July. Witness "Alexa" has been called yet another time to give evidence and solve the mystery. The police are hoping that the smart assistant Amazon Echo, known as Alexa, was accidentally activated and recorded key moments of the murder. “It is believed that evidence of crimes, audio recordings capturing the attack on victim Silvia Crespo that occurred in the main bedroom … may be found on…